This file documents the usage of GNU/Hurd. This edition of the documentation was last updated for version 0.3 of the Hurd.

The GNU Hurd is the GNU Project's replacement for the Unix kernel. The Hurd is a collection of servers that run on the Mach microkernel to implement file systems, network protocols, file access control, and other features that are normally implemented by the Unix kernel or similar kernels such as Linux.

This manual is designed to be useful to everybody who is interested in using or administering a GNU/Hurd system.

If you are an end-user and you are looking for help on running the Hurd, the first few chapters of this manual describe the essential parts of installing, starting up, and shutting down a GNU/Hurd workstation. Subsequent chapters describe day-to-day use of the system, setting up and using networking, and the use of translators.

If you need help with a specific program, you can get a short explanation of the program's use by typing program --help at the command prompt; for example, to get help on grep, type grep --help. More complete documentation is available if you type info program; continuing with our example of grep, type info grep.

This manual attempts to introduce the essential topics with which a user of GNU/Hurd, or of any free Unix-like system, must be familiar. New users of GNU/Hurd and GNU/Linux often spend time discovering which skills they need; for example, users must learn which tools are important, and which sources of information are most useful. We introduce the reader to the skills and concepts that must be learnt, and tell the reader where to find further information.

An operating system kernel provides a framework for programs to share a computer's hardware resources securely and efficiently. This framework includes mechanisms for programs to communicate safely, even if they do not trust one another.

The GNU Hurd breaks up the work of the traditional kernel, and implements it in separate programs. The Hurd formally defines the communication protocols that each of the servers understands, so that it is possible for different servers to implement the same interface; for instance, the NFS and FTP filesystem servers both implement the same filesystem interface, and both communicate over the network through the same TCP/IP server. NFS, FTP, and the TCP/IP servers are all separate user-space programs (don't worry if these programs are not familiar to you; you can learn about them if and when you need to).

The GNU C Library provides a POSIX environment on the Hurd, by translating standard POSIX system calls into interactions with the appropriate Hurd server.

POSIX stands for Portable Operating System Interface. POSIX is a family of standards published by the IEEE, and Unix-like operating systems aim to be fully or partially compliant with it. GNU/Hurd is as compliant as any other Unix-like operating system. That is why ported applications, implementations of protocols, and other essential services have been available since the introduction of GNU/Hurd. But always remember: GNU's Not Unix. GNU/Hurd complies with POSIX, but is constantly growing to address the limitations of Unix-like operating systems.

Richard Stallman (RMS) started GNU in 1983, as a project to create a complete free operating system. In the text of the GNU Manifesto, he mentioned that there is a primitive kernel. In the first GNUsletter, Feb. 1986, he says that GNU's kernel is TRIX, which was developed at the Massachusetts Institute of Technology.

By December of 1986, the Free Software Foundation (FSF) had "started working on the changes needed to TRIX" [Gnusletter, Jan. 1987]. Shortly thereafter, the FSF began "negotiating with Professor Rashid of Carnegie-Mellon University about working with them on the development of the Mach kernel" [Gnusletter, June, 1987]. The text implies that the FSF wanted to use someone else's work, rather than have to fix TRIX.

In [Gnusletter, Feb. 1988], RMS was talking about taking Mach and putting the Berkeley Sprite filesystem on top of it, "after the parts of Berkeley Unix... have been replaced."

Six months later, the FSF said that "if we can't get Mach, we'll use TRIX or Berkeley's Sprite." Here, Sprite is presented as a full-kernel option, rather than just a filesystem.

In January, 1990, they say "we aren't doing any kernel work. It does not make sense for us to start a kernel project now, when we still hope to use Mach" [Gnusletter, Jan. 1990]. Nothing significant occurs until 1991, when a more detailed plan is announced:

"We are still interested in a multi-process kernel running on top of Mach. The CMU lawyers are currently deciding if they can release Mach with distribution conditions that will enable us to distribute it. If they decide to do so, then we will probably start work. CMU has available under the same terms as Mach a single-server partial Unix emulator named Poe; it is rather slow and provides minimal functionality. We would probably begin by extending Poe to provide full functionality. Later we hope to have a modular emulator divided into multiple processes." [Gnusletter, Jan. 1991].

RMS explains the relationship between the Hurd and Linux in http://www.gnu.org/software/hurd/hurd-and-linux.html, where he mentions that the FSF started developing the Hurd in 1990. As of [Gnusletter, Nov. 1991], the Hurd (running on Mach) is GNU's official kernel.

These announcements made it clear that the GNU Project was getting a Mach microkernel as a component of the GNU System. Once Lites, a single-server 4.4BSD userland environment, had been implemented on top of Mach, Mach-based systems became usable. Members of the GNU Project then began hacking Mach for use with the GNU Project's multi-server kernel replacement. The individuals involved in this work were Thomas Bushnell, BSG, and Roland McGrath. The Mach microkernel was originally developed at Carnegie Mellon University (CMU); after development subsided at CMU, the University of Utah took over and continued adding better drivers and fixing other critical deficiencies.

In the latest GNUmach release notes, kernel and glibc maintainer Roland McGrath clarifies the development history of the GNUmach microkernel.

"When maintenance of Mach 3.0 at CMU waned, the University of Utah's Flux Group took over in the form of the Mach4 project, and revamped much of the i386 machine support code in the course of their research. While at Columbia University, Shantanu Goel worked on using Linux device drivers in Mach, and later continued this work at the University of Utah. Utah Mach4 became the seat of Mach development on the 3.0-compatible line, and the microkernel underlying the GNU/Hurd multiserver operating system. When the Flux Group's research moved on from Mach to other systems, they wanted to reuse the work they had done in hardware support and device drivers; this work (and a whole lot more) eventually evolved into the OSKit. Meanwhile, when the Flux Group stopped maintaining Mach4, the Free Software Foundation's GNU Hurd Project had taken it up and produced the GNUmach release to go with the Hurd. Since then, the Hurd has become an all-volunteer project whose developers are not paid by the FSF, and later GNUmach releases incorporating bug fixes and updating the Goel/Utah encapsulation of Linux device drivers have been made by volunteers including Okuji Yoshinori and Thomas Bushnell. This bit of history explains some of why it was so easy to replace a lot of the GNUmach/Mach4 hardware support code with OSKit calls--in many cases the OSKit code is a direct evolution of the code originally in Mach4 that I was replacing, with the names changed and improvements Utah has made since the Mach4 days."

Firstly, a note for end-users: if you do not consider yourself "computer literate," then you definitely will not want to use the Hurd. No official release of the Hurd has yet been made, and the system is currently unstable. If you run the Hurd, you will encounter many bugs. For those people who use their computers for web-surfing, email, word processing, etc., and just want the infernal machine to work, the Hurd's bugs would prove extremely annoying.

For such people, a more stable system is preferable. Fortunately, the GNU system currently exists in a very stable state with Linux substituted for the Hurd as the kernel, so it is possible for end-users to use a powerful, stable, and Free Unix-like operating system. Debian GNU/Linux, a very high-quality GNU/Linux distribution, is available; in addition, many commercial companies sell boxed GNU/Linux distributions with printed documentation, and, to various extents, take measures to hide the complexity of the system from the user. Keep in mind that GNU/Hurd is not the system you use for web-surfing, email, word processing, and other such tasks now; it is the system that you will use for these tasks in the future.

Those who consider themselves computer-literate and are interested in the Hurd, but do not have experience with Unix-like systems, may also wish to learn the ropes using a more stable GNU/Linux system. Unix-like systems are quite different from other systems you may have used, and they take some getting used to. If you plan to use the GNU/Hurd in the future, we recommend you use Debian GNU/Linux.

If you consider yourself computer-literate, but are not a programmer, you can still contribute to the Hurd project. Tasks for non-programmers include running GNU/Hurd systems and testing them for bugs, writing documentation, and translating existing documentation into other languages. At present, most GNU/Hurd documentation is available only in English and/or French.

Anyone interested in the Hurd, whether a student studying the system, a programmer helping to develop the Hurd servers, or an end-user finding bugs or writing documentation, will want to know how GNU/Hurd is similar to, and different from, Unix-like kernels.

For all intents and purposes, the Hurd is a modern Unix-like kernel, like Linux and the BSDs. GNU/Hurd uses the GNU C Library, whose development closely tracks standards such as ANSI/ISO, BSD, POSIX, Single Unix, SVID, and X/Open. Hence, most programs available on GNU/Linux and BSD systems will eventually be ported to run on GNU/Hurd systems.

An advantage of all these systems (GNU/Hurd, GNU/Linux, and the BSDs) is that, unlike many popular operating systems, they are Free Software. Anybody can use, modify, and redistribute these systems under the terms of the GNU General Public License (see GNU General Public License) in the case of GNU/Hurd and GNU/Linux, and the BSD license in the case of the BSDs. In fact, the entire GNU System is a complete Unix-like operating system licensed under the GNU GPL.

Although it is similar to other Free Unix-like kernel projects, the Hurd has the potential to be much more. Unlike these other projects, the Hurd has an object-oriented structure that allows it to evolve without compromising its design. This structure will help the Hurd undergo major redesign and modifications without having to be entirely rewritten. This extensibility makes the Hurd an attractive platform for learning how to become a kernel hacker or for implementing new ideas in kernel technology, as every part of the system is designed to be modified and extended. For example, the MS-DOS FAT filesystem was not supported by GNU/Hurd until a developer wrote a translator that allows us to access this filesystem. In a standard Unix-like environment, such a feature would be put into the kernel. In GNU/Hurd, no kernel recompilation is necessary, since the filesystem is implemented as a user-space program.

Scalability has traditionally been very difficult to achieve in Unix-like systems. Many computer applications in both science and business require support for symmetric multiprocessing (SMP). At the time of this writing, Linux could scale to a maximum of 8 processors. By contrast, the Hurd implementation is aggressively multi-threaded so that it runs efficiently on both single processors and symmetric multiprocessors. The Hurd interfaces are designed to allow transparent network clusters (collectives), although this feature has not yet been implemented.

Of course, the Hurd (currently) has its limitations. Many of these limitations are due to GNU Mach, the microkernel on which the Hurd runs. For example, although the Hurd has the potential to be a great platform for SMP, no such multiprocessing is currently possible, since GNU Mach has no support for SMP. The Hurd supports less hardware than current versions of Linux, since GNU Mach uses the hardware drivers from version 2.0 of the Linux kernel. Finally, GNU Mach is a very slow microkernel, and contributes to the overall slowness of GNU/Hurd systems.

Mach is known as a first-generation microkernel; much work has gone into the much-improved second-generation microkernels currently being developed. A long-term goal is to port the Hurd to L4, a very fast second-generation microkernel. This port will offer substantial improvements to the system. In the short term, the Hurd developers plan to move the Hurd to OSKit Mach, an improved version of Mach being developed at the University of Utah.

The Hurd is still under active development, and no stable release has been made. This means that the Hurd's code base is much less mature than that of Linux or the BSDs. There are bugs in the system that are still being found and fixed. Also, many features, such as a DHCP client and support for several filesystem types, are currently missing.

The deficiencies in the Hurd are constantly being addressed; for example, until recently, pthreads (POSIX threads) were missing. This meant that several major applications, including GNOME, KDE, and Mozilla, could not run on GNU/Hurd. Now that the Hurd has a preliminary pthreads implementation, we may soon see these applications running on GNU/Hurd systems.

The Hurd is a very modern design. It is more modern than Linux or the BSDs, because it uses a microkernel instead of a monolithic kernel. It is also more modern than Apple's Darwin or Microsoft's NT microkernel, since it has a multi-server design, as opposed to the single-server design of Darwin and NT. This makes the Hurd an ideal platform for students interested in operating systems, since its design closely matches the recommendations of current operating system theory. In addition, the Hurd's modular nature makes it much easier to understand than many other kernel projects.

GNU/Hurd is also an excellent system for developers interested in kernel hacking. Whereas Linux and the BSDs are quite stable, there is still much work to be done on the Hurd; for example, among all the filesystem types in use today, the Hurd only supports ext2fs (the Linux filesystem), ufs (the BSD filesystem), and iso9660fs (the CD filesystem). GNU/Hurd offers a developer the opportunity to make a substantial contribution to a system at a relatively early point in its development.

The GNU operating system is alive and well today. In 1998, Marcus Brinkmann brought the Hurd to the Debian Project. This gave GNU/Hurd a software management system (apt and dpkg), worldwide access to the latest free software, and a great increase in popularity.

The original architect, Thomas Bushnell, BSG, is active on the mailing lists, helping younger hackers with the design and development of the Hurd. There is now a team of individuals from around the world, who regularly give talks at conferences about the importance of the design and implementation of the Hurd's unique multiserver microkernel environment. There is also work on porting the Hurd to two other microkernels and to another architecture.

Debian GNU/Hurd has been Debian's most active non-Linux port. The user applications that are ported to GNU/Hurd are very current and are actively being improved. Development of GNU/Hurd within the Debian project has also produced incremental development releases on sets of CDs. Also, new developers can get the source code to the Hurd from anywhere on the planet via Debian's packaging tool apt, and can get an even more recent snapshot release from the GNU Project's alpha.gnu.org server.

Like other multi-server, microkernel-based operating systems, GNU/Hurd has had its share of criticism. Many people have criticized the Hurd for its lack of a stable release; for its design, which is unfamiliar to those used to monolithic kernels such as Linux and the BSD kernels; and for its connection to the strong ideals of the GNU Project. Nevertheless, GNU/Hurd is a cutting-edge operating system. Other operating systems with designs similar to GNU/Hurd are QNX and Sawmill. QNX is meant for embedded systems, and many of the GNU Project's most famous programs are available for it. Sawmill was a research project set up by IBM; it was never released outside the academic community. Sawmill is a version of the Linux kernel running as a multi-server system on top of the L4 microkernel. It used some of the same ideas as GNU/Hurd, with members of the L4 Project influencing some of its research in microkernel-based technology.

The Hurd is distributed under the terms of the GNU General Public License (see GNU General Public License). The GNU GPL protects your right to use, modify, and distribute all parts of the GNU system.

We often show command prompts in this manual. When doing so, the command prompt for the root user (System Administrator) includes a '#' character, and the command prompt for a regular user includes a '$' character. For example, if we were discussing how to shut down the system, which only the root user may do, the prompt may look like this:

# halt <ENTER>

When discussing how to list the contents of a directory, which regular users can do, provided they have read permission for the directory (more on this later), the prompt may appear as:

$ ls <ENTER>

Prompts may include additional characters, for example:

bash-2.05# mke2fs -o hurd /dev/hda3 <ENTER>

Hyperlinks in this manual look like this: http://www.gnu.org/.

A definition is typeset as shown in this sentence.

There is a footnote at the end of this sentence.(1)

The names of commands appear like this: info. Files are indicated like this: README.txt.

This document includes cross-references, such as this one: See GNU General Public License, and this parenthetical one (see GNU General Public License).

Before you can use GNU/Hurd on your favorite machine, you'll need to install its base software components. Currently, the Hurd only runs on Intel i386-compatible architectures (such as the Pentium), using the GNU Mach microkernel.

If you have unsupported hardware or a different microkernel, you will not be able to run the Hurd until all the required software has been ported to your architecture. Porting is an involved process which requires considerable programming skills, and is not recommended for the faint-of-heart. If you have the talent and desire to do a port, contact bug-hurd@gnu.org in order to coordinate the effort.

By far the easiest and best way to install GNU/Hurd is to obtain a Debian GNU/Hurd binary distribution. Even if you plan on recompiling the Hurd itself, it is best to start off with an already-working GNU system so that you can avoid having to reboot every time you want to test a program.

You can get GNU from a friend under the conditions allowed by the GNU GPL (see GNU General Public License). Please consider sending a donation to the Free Software Foundation so that we can continue to improve GNU software.

You can order Debian GNU/Hurd on CD-ROM from CopyLeft, or you can FTP the complete GNU system as ISO images from your closest GNU mirror or from ftp://ftp.gnu.org/iso. Again, please consider donating to the Free Software Foundation.

The format of the binary distribution is prone to change, so this manual does not describe the details of how to install GNU. The README file distributed with the binary distribution gives you complete instructions.

After you follow all the appropriate instructions, you will have a working GNU/Hurd system. If you have used GNU/Linux systems or other Unix-like systems before, GNU/Hurd will look quite familiar. You should play with it for a while, referring to this manual only when you want to learn more about GNU/Hurd.

If GNU/Hurd is your first introduction to the GNU operating system, then you will need to learn more about GNU in order to be able to use it. This manual will provide the basics for you to expand on and for you to productively use your GNU/Hurd machine. You should talk to your friends who are familiar with GNU, in order to find out about classes, online tutorials, or books which can help you learn more about GNU.

If you have no friends who are already using GNU, you can find some useful starting points at the GNU web site, http://www.gnu.org/. You can also send e-mail to help-hurd@gnu.org, to contact fellow Hurd users. You can join this mailing list by sending a request to help-hurd-request@gnu.org. You may even want to chat with the developers yourself. You can reach some on irc.freenode.net in the #hurd channel.

A great way to install the Hurd is to use an existing GNU/Linux operating system. This is the easiest installation method I have seen. What you need is:

 * access to the Debian and GNU servers (<ftp.debian.org> and <alpha.gnu.org>)
 * the crosshurd package (crosshurd-#.##.deb)

Using your GNU/Linux system, create a 1 GB partition with either fdisk or cfdisk. Then format it for the Hurd. For example, if the new partition is /dev/hda2, format it this way:

bash-2.05# mke2fs -o hurd /dev/hda2 <ENTER>

After this is done, you can mount the partition. You then need to install the crosshurd package from your nearest Debian mirror. Run crosshurd only after the partition is mounted: the program will ask you where your GNU/Hurd partition is mounted. In this example, we mount the partition on /gnu, but you can mount your partition anywhere you choose. The install program will also ask whether you'd like to make /usr a link to . (the single period refers to the directory containing the link, which here is the root directory on GNU/Hurd). It is a good idea to say yes to this option. GNU/Hurd does not require a /usr partition like traditional Unix-like systems.
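The effect of making /usr a link to . can be tried out safely before you answer crosshurd's question. The sketch below is an illustration only: it rebuilds the same layout in a scratch directory made with mktemp rather than on your Hurd partition, and the file name example-tool is hypothetical. It shows that a path through usr/ reaches the same file as the path without it.

```shell
#!/bin/sh
# Illustration only: mimic, in a throwaway directory, the layout crosshurd
# offers to create on the Hurd partition, where "usr" is a symbolic link
# to "." (the directory that contains it, i.e. the root directory).
root=$(mktemp -d)

mkdir "$root/bin"
touch "$root/bin/example-tool"      # hypothetical file name
ln -s . "$root/usr"                 # usr -> .

# Both paths reach the same file, so programs that look in /usr/bin
# find what actually lives in /bin.
ls "$root/bin/example-tool" "$root/usr/bin/example-tool"
readlink "$root/usr"                # prints .
```

Because usr points back to its own parent directory, even usr/usr/bin/example-tool resolves to the same file. Remove the scratch directory with rm -r when you are done.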

You must be the root user for these commands to work:

bash-2.05# apt-get update <ENTER>

bash-2.05# apt-get install crosshurd <ENTER>

bash-2.05# mount /dev/hda2 /gnu <ENTER>

bash-2.05# crosshurd <ENTER>

When crosshurd has finished, you should be able to reboot. You must use a GRUB boot floppy to boot the Hurd. Once you've booted, you can finish the installation by running the native-install shell script.

Note: When you are booting the Hurd for the first time, you must give GRUB the single-user option (-s), as in the following example. Also note that the first long module entry is all on one line.

title GNU/Hurd
root (hd0,1)
kernel /boot/gnumach.gz -s root=device:hd0s2
module /hurd/ext2fs.static --multiboot-command-line=${kernel-command-line} --host-priv-port=${host-port} --device-master-port=${device-port} --exec-server-task=${exec-task} -T typed ${root} $(task-create) $(task-resume)
module /lib/ld.so.1 /hurd/exec $(exec-task=task-create)
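The examples in this chapter name the same partition in three ways: GRUB's root (hd0,1), GNU Mach's hd0s2 (used in root=device:hd0s2 and in /dev on GNU/Hurd), and Linux's /dev/hda2. The main difference is that GRUB numbers partitions from 0, while GNU Mach and Linux number them from 1. The helper functions below are hypothetical, not part of GRUB, GNU Mach, or Debian; they assume IDE disks and that GRUB's BIOS drive order matches the IDE order, which holds on a simple single-disk machine.

```shell
#!/bin/sh
# Hypothetical helpers (not part of GRUB, GNU Mach, or Debian) that
# translate a GRUB Legacy partition name such as "(hd0,1)" into the
# names GNU Mach and Linux use for the same partition.
# Assumes IDE disks and that GRUB's BIOS drive order matches the IDE order.

# Split "(hdD,P)" into the drive number D and partition number P.
parse_grub_partition() {
    spec=${1#"(hd"}
    spec=${spec%")"}
    drive=${spec%%,*}
    part=${spec#*,}
}

# GRUB counts partitions from 0; GNU Mach counts slices from 1.
grub_to_mach() {
    parse_grub_partition "$1"
    echo "hd${drive}s$((part + 1))"
}

# Linux uses one letter per IDE drive (0 -> a, 1 -> b, ...) and also
# counts partitions from 1.
grub_to_linux() {
    parse_grub_partition "$1"
    letter=$(printf '%s' abcdefgh | cut -c"$((drive + 1))")
    echo "/dev/hd${letter}$((part + 1))"
}

grub_to_mach "(hd0,1)"     # prints hd0s2, as in root=device:hd0s2
grub_to_linux "(hd0,1)"    # prints /dev/hda2
```

The same rule explains the sample menu.lst later in this chapter, where root (hd0,0) goes with root=device:hd0s1.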

When you have booted the Hurd, you will be at a single-user shell prompt. You should be able to type native-install and see it set up your translators and install some essential system software.

sh-2.05# ./native-install <ENTER>

When this is done, you will be prompted to reboot and run native-install again. The second round of native-install should configure your system for use. After this, a few important steps remain.

You will need to create device translators for your swap partition, your home partition (optional), and your CD-ROM drive. To do so, cd into your /dev directory and run the MAKEDEV script. After these devices are created, edit the file /etc/fstab to add your swap device and, optionally, your CD-ROM. Before you can edit that file, you need to set your terminal type for the current session. GNU/Hurd's default editor is nano, a small, lightweight, simple editor.

sh-2.05# cd /dev <ENTER>

sh-2.05# ./MAKEDEV hd0s3 hd0s4 hd2 <ENTER>

sh-2.05# export TERM=mach <ENTER>

sh-2.05# nano /etc/fstab <ENTER>

The last command will open the file fstab for editing. You should see an entry for your root (/) filesystem. Add an entry for your swap partition to this file, as in this example:

# device     mount point  type       options    dump  pass
/dev/hd0s2   /            ext2fs     rw         1     1
/dev/hd0s3   none         swap       sw         0     0
/dev/hd0s4   /home        ext2fs     rw         0     0
/dev/hd2     /cdrom       iso9660fs  ro,noauto  0     0
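A missing or extra column in /etc/fstab is an easy mistake to make when editing with nano. The following shell function is a minimal sketch, not a tool shipped with the Hurd; it assumes the six-column layout shown above and uses only awk to report entries with the wrong number of fields.

```shell
#!/bin/sh
# Minimal sketch, not a Hurd tool: report every entry in an fstab-style
# file that does not have exactly six whitespace-separated fields
# (device, mount point, type, options, dump, pass).
# Blank lines and lines whose first field starts with "#" are ignored.
# Returns 0 if every entry looks well-formed, non-zero otherwise.
check_fstab() {
    awk '
        NF == 0 || $1 ~ /^#/ { next }
        NF != 6 {
            printf "line %d: expected 6 fields, found %d: %s\n", NR, NF, $0
            bad = 1
        }
        END { exit bad }
    ' "$1"
}

if check_fstab /etc/fstab; then
    echo "/etc/fstab looks well-formed"
fi
```

This only checks the shape of each line; it cannot tell whether a device such as /dev/hd0s3 actually exists, so you still need to create the devices with MAKEDEV.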

Note: You can use the same swap partition from your GNU/Linux system.

After editing this file and creating your devices, you should be able to reboot into a multi-user GNU/Hurd system.

Bootstrapping(2) is the procedure by which your machine loads the microkernel and transfers control to the Hurd servers.

The bootloader is the first piece of software that runs on your machine. Many hardware architectures have a very simple startup routine which reads a very simple bootloader from the beginning of the internal hard disk, then transfers control to it. Other architectures have startup routines which are able to understand more of the contents of the hard disk, and directly start a more advanced bootloader.

Currently, GRUB(3) is the GNU bootloader. GNU GRUB provides advanced functionality, and is capable of loading several different kernels (such as Linux, the *BSD family, and DOS).

From the standpoint of the Hurd, the bootloader is just a mechanism to get the microkernel running and transfer control to the Hurd servers. You will need to refer to your bootloader and microkernel documentation for more information about the details of this process. However, you don't need to know how this all works in order to use GNU.

At the moment, you are booting the Hurd with a floppy disk. Doing so regularly is not a good idea, since floppy disks are prone to failure. This section will show you how to install GRUB into the master boot record of your hard-disk.

What you need are:

First you will need to make a directory for GRUB. Do this now (you must be logged in as root).

bash-2.05# mkdir /boot/grub

Now copy the GRUB loader files, which are usually located in /lib/grub/i386-unknown-pc/. All the files in this directory need to be copied to the /boot/grub directory.

bash-2.05# cp /lib/grub/i386-unknown-pc/* /boot/grub/

Now, you have two more things to do. First, you must construct a menu.lst file and put it in your /boot/grub directory. This file defines the menu that GRUB displays at boot time. Before you tackle this, you should read the GRUB info pages to get a better understanding of the way GRUB works. I ask this of you because the Hurd is constantly being worked on to boot more efficiently, and the syntax may change with the Hurd's development releases.

Here is my sample menu.lst file:

timeout 10
color light-grey/black red/light-grey

title Debian GNU/Hurd
root (hd0,0)
kernel /boot/gnumach.gz root=device:hd0s1
module /hurd/ext2fs.static --multiboot-command-line=${kernel-command-line} --host-priv-port=${host-port} --device-master-port=${device-port} --exec-server-task=${exec-task} -T typed ${root} $(task-create) $(task-resume)
module /lib/ld.so.1 /hurd/exec $(exec-task=task-create)

title Debian GNU/Linux
root (hd0,1)
kernel /vmlinuz root=/dev/hda2 ro

Now that you have your menu.lst file in /boot/grub, you are ready to install GRUB and make your machine bootable from the hard disk. Write down what you put in your menu.lst file, because you must type this information into the GRUB command line. If you forget this information, your GRUB boot floppy should be able to boot your system so that you can write the relevant data down and continue with the installation of GRUB.

When the boot menu of your GRUB floppy appears, press <c> on your keyboard. This will put you at the GRUB command prompt.

You need to start with the root command:

grub> root (hd0,0)

The root command in the above example tells GRUB that my GNU/Hurd system is installed on the first partition of the first drive. After you press the <Enter> key, GRUB should print a line mentioning ext2 and a partition type number. This means that GRUB found your root filesystem.

Next, you need GRUB to load the kernel. This is the tricky part. Here is what I have:

grub> kernel /boot/gnumach.gz root=device:hd0s1

Once you press <Enter> again, you will see some more info. If you get an error, that just means you made a typing mistake, or picked the wrong root device for GRUB. There is no need to worry: this will not make your machine explode.

Next, you need to enter the modules for GRUB to use. This is probably the trickiest part of booting the system.

grub> module /hurd/ext2fs.static --multiboot-command-line=${kernel-command-line} --host-priv-port=${host-port} --device-master-port=${device-port} --exec-server-task=${exec-task} -T typed ${root} $(task-create) $(task-resume)

Note that this must all be on one line; do not press <Enter> until the end.

Next, type:

grub> module /lib/ld.so.1 /hurd/exec $(exec-task=task-create)

That first monster module line is a lot to type each time you want to boot GNU/Hurd. We can save ourselves a lot of time and effort by putting this information, along with all other relevant information, in the menu.lst file, as shown in the example above.

So, we open nano, type something similar to the example menu.lst file shown above, reboot the system, and ... the system freezes on boot.

What went wrong?

The answer is that nano automatically inserts line breaks at the end of long lines, so our big monster module line is broken up into a form that GRUB cannot understand.

There are two easy ways to solve this problem. First, you could create your menu.lst file using another editor, such as GNU Emacs, that does not automatically insert line breaks. Second, you could use the <\> character, which tells GRUB to read the next line as if it were part of the current line; for example, you could type:

module /hurd/ext2fs.static \
--multiboot-command-line=${kernel-command-line} \
--host-priv-port=${host-port} --device-master-port=${device-port} \
--exec-server-task=${exec-task} \
-T typed ${root} $(task-create) $(task-resume)

GRUB would then interpret these five lines as one long line.
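Before rebooting, it can help to look for the damage nano causes. The sketch below is a heuristic, not a GRUB feature: it flags any line in menu.lst that neither starts with a known GRUB command nor follows a line ending in <\>. The list of command names in the code is an assumption drawn from the examples in this section; extend it if your menu.lst uses other commands.

```shell
#!/bin/sh
# Heuristic sketch: list lines of a GRUB Legacy menu.lst that do not start
# with a known command and do not continue a line ending in "\".
# Such lines are often the tail of a long module line that an editor
# (for example nano) has wrapped. The keyword list is an assumption;
# extend it with any other GRUB commands your menu.lst uses.
# Returns 0 if nothing suspicious was found, 1 otherwise.
find_wrapped_lines() {
    awk '
        # The previous line ended in a backslash, so this line continues it.
        cont { cont = /\\$/; next }
        # Skip blank lines and comments.
        NF == 0 || $1 ~ /^#/ { next }
        $1 !~ /^(timeout|default|fallback|color|title|root|kernel|module|setup)$/ {
            printf "line %d may be a wrapped fragment: %s\n", NR, $0
            found = 1
        }
        { cont = /\\$/ }
        END { exit found }
    ' "$1"
}

if find_wrapped_lines /boot/grub/menu.lst; then
    echo "no suspicious lines found"
fi
```

A line such as --host-priv-port=${host-port} standing on its own, without a <\> at the end of the line before it, is exactly the kind of fragment that makes the boot freeze described above.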

To fully understand all our module commands, you can check the Hurd Reference Manual, which should explain every detail. These commands are very important for developers, as they can do many interesting things with the GRUB bootloader and the Hurd. For instance, with GRUB, the newest GNU Mach microkernel can be debugged through your computer's serial port. GRUB can also boot your kernel and then fetch the rest of your system over the network; GNU/Hurd only partially supports this, although older Unix-like operating systems can do it easily.

If you're still with me, then you should be able to safely install GRUB on your hard disk. If you're confused, then you should consult the GRUB documentation. You should be able to install GRUB by typing the following command at your GRUB command prompt:

grub> setup (hd0)

If this is successful, you should be able to press the reset button on your machine's case and remove the GRUB floppy. You just installed GRUB to the master boot record of the hard disk! That's one task many people are scared to attempt.

The serverboot program has been deprecated. Newer GNU Mach kernels support processing the boot script parameters and boot the Hurd directly.

The serverboot program is responsible for loading and executing the rest of the Hurd servers. Rather than containing specific instructions for starting the Hurd, it follows general steps given in a user-supplied boot script.

To boot the Hurd using serverboot, the microkernel must start

serverboot as its first task, and pass it appropriate arguments.

serverboot has a counterpart, called boot, which can be invoked

while the Hurd is already running, and allows users to start their own complete

sub-Hurds (see The Sub-Hurd).

The serverboot program has the following synopsis:

serverboot -switch... [[host-port device-port] root-name]

Each switch is a single character, out of the following set:

a

d

q

:/boot/servers.boot.

All the switches are put into the ${boot-args} script variable.

host-port and device-port are integers which represent the microkernel host and device ports, respectively (and are used to initialize the ${host-port} and ${device-port} boot script variables). If these ports are not specified, then serverboot assumes that the Hurd is already running, and fetches the current ports from the proc server, which is documented in the GNU Hurd Reference Manual.

root-name is the name of the microkernel device that should be used as the Hurd bootstrap filesystem. serverboot uses this name to locate the boot script (described above), and to initialize the ${root-device} script variable.

Boot scripts are parsed by serverboot to boot the Hurd, and are also used to boot further Hurd systems in parallel to the first. See /boot/servers.boot for an example of a Hurd boot script.

The boot program can be used to start a set of core Hurd servers while another Hurd is already running. You will rarely need to do this, and it requires superuser privileges to control the new Hurd (or allow it to access certain devices), but it is interesting to note that it can be done.

Usually, you would make changes to only one server, and simply tell your programs to use it in order to test out your changes. This process can be applied even to the core servers. However, some changes have far-reaching effects, and so it is nice to be able to test those effects without having to reboot the machine.

Here are the steps you can follow to test out a new set of servers:

boot understands the regular libstore options, so you may use a file or other store instead of a partition.

$ dd if=/dev/zero of=my-partition bs=1024k count=400
400+0 records in
400+0 records out
$ mke2fs ./my-partition
mke2fs 1.18, 11-Nov-1999 for EXT2 FS 0.5b, 95/08/09
my-partition is not a block special device.
Proceed anyway? (y,n) y
Filesystem label=
OS type: GNU/Hurd
Block size=1024 (log=0)
Fragment size=1024 (log=0)
102400 inodes, 409600 blocks
20480 blocks (5.00%) reserved for the super user
First data block=1
50 block groups
8192 blocks per group, 8192 fragments per group
2048 inodes per group
Superblock backups stored on blocks:
8193, 24577, 40961, 57345, 73729, 204801, 221185, 401409
Writing inode tables: done
Writing superblocks and filesystem accounting information: done
$

$ settrans -c ./my-root /hurd/ext2fs -r `pwd`/my-partition
$ fsysopts ./my-root --writable
$ cd my-root
$ tar -zxpf /pub/debian/FIXME/gnu-20000929.tar.gz
$ cd ..
$ fsysopts ./my-root --readonly
$

boot.

$ boot -D ./my-boot ./my-boot/boot/servers.boot ./my-partition

[...]

/dev/hd2s1:

$ settrans /mnt /hurd/ext2fs --readonly /dev/hd2s1

$ boot -d -D /mnt -I /mnt/boot/servers.boot /dev/hd2s1

Note that it is impossible to share microkernel devices between the two running Hurds, so don't confuse your sub-Hurd with your main GNU/Hurd system. When you're finished testing your new Hurd, you can run the halt or reboot programs to return control to the parent Hurd.

If you're satisfied with your new Hurd, you can arrange for your bootloader to start it, and then reboot your machine. You will then be in a safe place to overwrite your old Hurd with the new one, and reboot back to your old configuration (with the new Hurd servers).

Usage: boot [option...] boot-script device...

-c, --kernel-command-line=command line

-d, --pause

-D, --boot-root=dir

--interleave=blocks

-I, --isig

-L, --layer

-s, --single-user

-T, --store-type=type

Mandatory or optional arguments to long options are also mandatory or optional for any corresponding short options.

If neither --interleave nor --layer is specified, multiple devices are concatenated.

You can shut down or reboot your GNU/Hurd machine by typing one of these commands:

bash-2.05# reboot <ENTER>

bash-2.05# halt <ENTER>

After halt, in a matter of seconds the system should tell you that it is in a tight loop, and that pressing <ctrl>, <alt>, and <Delete> at the same time will make the system reboot. It is now safe to turn the computer off.

Now that you have GNU/Hurd installed and booting, you're probably wondering "What can I do with it?" Well, GNU/Hurd is capable of being a console-based workstation. The X Window System is known to work, but is not required in order to learn how to use GNU/Hurd. This chapter is intended to get you acquainted with the command line of GNU/Hurd. You should read the Hurd FAQ and also have a working knowledge of Bash, the GNU shell. If you are not familiar with this material, see The Shell for a brief introduction.

If you successfully installed GNU/Hurd and you are in multi-user mode, you should have a login shell ready for you, which should look like this:

GNU 0.3 (hurd)

Most of the programs included with the Debian GNU/Hurd system are freely redistributable; the exact distribution terms for each program are described in the individual files in /usr/share/doc/*/copyright

Debian GNU/Hurd comes with ABSOLUTELY NO WARRANTY, to the extent permitted by applicable law.

login>

Type Login:USER or type HELP

You can go ahead and type login root <ENTER> to get started.

To use GNU/Hurd, you need to know the basic Unix-like commands. The GNU Project has written a replacement for almost every Unix command. You need to learn how to copy, move, view, and modify files. It is also important to learn one of the many editors that are available for GNU/Hurd. The default editor is Nano, which is very simple and self-explanatory, though not as powerful as Emacs or vi. It is the default because it is small and easy on your computer's memory. I am not going to cover it here because it is quite self-explanatory. This section aims to get you started with these essential commands. If you already know them, you can probably skip ahead. Also, you might want to get comfortable with the default editor first, then come back to this section to learn your basic GNU/Hurd shell commands.

The shell is the program that reads the commands you type and runs them for you; it is how you work with the filesystem and the data on it. The shell used here is bash (the Bourne-Again SHell).(4) Once you get comfortable with the shell, you can happily sit at any Unix-like workstation and get some work done, so it is very important that you get comfortable with it. People sometimes loosely call it the terminal or the console. At this prompt you should be ready to begin. (Note: Depending on how your shell's prompt variable is set, your prompt may also display your current working directory, for example "user@hurd:~/$".)

bash-2.05$

To make a directory for yourself, you would use the mkdir (make directory) command. You can make as many as you want, as long as you create them somewhere you are allowed to write, such as your home directory.

You may notice that many commands are abbreviations. The tradition of Unix-like systems dictates that commands take this form. Since the user is expected to type many commands into the shell, the commands are kept succinct so that fewer keystrokes are required. The side effect, of course, is many command names that are confusing at first sight.

bash-2.05$ mkdir docs tmp sources <ENTER>

To change directories you would use the cd (change directory) command.

One thing to remember is that wherever you are in the filesystem, typing cd alone will put you back in your home directory. (Note: The bash shell features command-line completion: type the first few letters of a file or directory name, then press <TAB>, and bash completes the name for you.)

bash-2.05$ cd /usr/local <ENTER> #this puts you in the /usr/local directory.

bash-2.05$ cd <ENTER> #this puts you back in the home directory.

There is another shortcut, cd ../, which puts you in the directory above the one you're currently in.
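To see cd .. and cd ../ in action, here is a minimal sketch that builds a throwaway directory tree with mktemp and uses pwd (print working directory) to show where you are after each step. The directory names src and hurd are made up for this example.

```shell
#!/bin/bash
# Create a scratch tree <tmp>/src/hurd so that no real files are touched.
top=$(mktemp -d)
mkdir -p "$top/src/hurd"

cd "$top/src/hurd" || exit 1
pwd        # prints <tmp>/src/hurd

cd ..      # move up one level
pwd        # prints <tmp>/src

cd ../     # the trailing slash makes no difference
pwd        # prints <tmp>
```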

There are many ways to view a file, but we'll use the most common command, called cat.(5)

bash-2.05$ cat README | less <ENTER>

You may wonder what | less means. We use the | (pipe symbol) to take the output of cat README and feed it to the less command. less allows you to view the document one screen at a time, and it also lets you scroll back toward the beginning of the document. You have to type q to quit less.

To copy a file from one place to another you use the cp (copy) command. The cp command has many options, such as -f, -v, and -r. Luckily for us, the info command (for example, info cp) will clarify these three options and many other confusing commands.

Typing

bash-2.05$ cp README chapter1.txt foo1/ <ENTER>

will copy README and chapter1.txt to the foo1 directory.

Here are a few good options to remember:

bash-2.05$ cp -rfv foo1/ file2.txt tmp/ <ENTER>

bash-2.05$ cp -rf /cdrom/* ~/ <ENTER>

The -r means "recursive": it tells cp to copy directories along with everything inside them, so the foo1 directory, all the contents of foo1, and file2.txt are copied to the tmp directory. The -f means "force": if an existing destination file cannot be opened, cp removes it and tries again. I have a habit of forcing things. The -v means "verbose": it shows you on the screen which files are being copied. In the second example, we have ~/, which is a shortcut representing your home directory, so that command copies the contents of /cdrom to your home directory. (Note: The asterisk '*' is called a wildcard character, and it matches any sequence of characters in a file name.)
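A minimal sketch of why -r matters, run in a scratch directory created with mktemp; the names foo1, tmp, and file2.txt mirror the example above but are created here just for illustration.

```shell
#!/bin/bash
# Scratch directory with a small directory and a plain file to copy.
work=$(mktemp -d)
cd "$work" || exit 1
mkdir foo1 tmp
printf 'one\n' > foo1/a.txt
printf 'two\n' > file2.txt

# Without -r, cp refuses to copy a directory and reports an error.
cp foo1 tmp/ 2>/dev/null || echo "cp without -r: foo1 was not copied"

# With -r the directory and its contents are copied; -v names each file.
cp -rv foo1/ file2.txt tmp/
```

Afterwards, tmp contains foo1/a.txt and file2.txt.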

To rename or move a file or directory you would use the mv (move) command.

Be careful, because mv is a very powerful command: for example, if the destination is an existing file, mv silently replaces it, so you can lose data if you're not careful about what you do. To rename a file or directory you would type, for example:

bash-2.05$ mv foo1/ foo2 <ENTER>

Now you no longer have a foo1 directory; it's called foo2 now. (If a directory called foo2 already exists, mv moves foo1 inside it instead of renaming it.) The same thing can be done with plain files, too.

I'll bet you're saying, "I'm doing all this work, but how do I view my directories?"

Your answer would be the ls (list) command. The ls command also has many options.

bash-2.05$ ls -al <ENTER>

The two options I have here are used more often than any others. The -a option shows all the files in the current directory, including hidden ones.(6) The -l option tells ls to display the directory in long format. This means it shows the modification date, file size, file permissions, etc. of all files.
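To see what -a changes, here is a minimal sketch in a scratch directory; the file names visible.txt and .hidden-config are made up for the example.

```shell
#!/bin/bash
# Scratch directory with one ordinary file and one "dotted" (hidden) file.
work=$(mktemp -d)
cd "$work" || exit 1
touch visible.txt .hidden-config

ls        # lists only visible.txt
ls -a     # also lists ., .., and .hidden-config
ls -al    # the same entries, in long format
```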

You will also notice that some files in your home directory have a dot at the front of their names. These dotted files are typically hidden configuration files. You will also notice an entry named with a single dot, and another named with two dots. In the Unix filesystem, a single dot represents the current directory, and two dots represent the directory above your current working directory. These dots are commonly used when building software:

bash-2.05# ../configure # This runs the configure script located in the directory above.

bash-2.05# ./program # This runs the program located in the current directory.

Now that you have made all this mess in your home directory, I'll bet you're wondering how you can get rid of things. There are two important deleting commands. The rm (remove) command is convenient for removing files. The rmdir command will only delete directories that are empty, whereas rm, with the right options, can also remove directories that rmdir can't. I'll show you a basic rm example, then my favorite with the extra options.

bash-2.05$ rm README <ENTER>

Now, rm with some options:

bash-2.05$ rm -rf foo2/ <ENTER>

The second example is very powerful. The -r means "recursive" and the -f means "force." This removes foo2 and all of its contents, without prompting the user for confirmation.

These are only the basic commands to get you started... there are many, many two- and three-letter commands. The more you use GNU/Hurd, the more options and commands you'll learn. The more you learn, the more freedom you'll have.

And that is a very valuable thing.

Now that you have been cruising around your filesystem, you might be wondering how much space you have left on your drive. There is a simple command to check this:

bash-2.05$ df / <ENTER>

Filesystem 1k-blocks Used Available Use% Mounted on

/dev/hd0s1 1920748 1203996 619180 67% /

If you don't put a directory such as / or /home after the command, the df (disk free) command will spit out some errors.

If your prompt is not telling you what directory you are in, you can use the pwd (print working directory) command:

bash-2.05$ pwd <ENTER>

/usr/src

As a final note, always remember: info is your friend on GNU/Hurd.

Computers borrow many concepts from mathematics. One very important concept that will be familiar to those with a background in programming is the idea of a function. Informally, the term function encapsulates the following idea:

isdigit(): you give it a character, and it returns 1 if the character is a digit, and 0 otherwise.

There are many more examples of functions. Notice from our examples that a function may have multiple inputs and outputs; however, a function must always produce the same output for any given set of inputs. It would not do for a drink machine to sometimes give root beer when asked for iced tea!
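The same idea can be expressed in the shell. The following is a minimal sketch of a shell function modelled on the isdigit() example; the name is_digit is hypothetical and chosen only for this illustration. Like a mathematical function, it always gives the same output for the same input.

```shell
#!/bin/bash
# is_digit: a hypothetical shell function modelled on isdigit().
# It prints 1 if its single argument is one digit from 0 to 9,
# and 0 for anything else.
is_digit() {
    case "$1" in
        [0-9]) echo 1 ;;
        *)     echo 0 ;;
    esac
}

is_digit 7     # prints 1
is_digit x     # prints 0
is_digit 7     # same input, same output: prints 1 again
```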

In GNU systems and other systems that follow the UNIX tradition, programs are functions. Every program in a GNU system has a standard input and a standard output.

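By default, a program's standard input comes from the terminal (what you type at the keyboard), and its standard output goes to the terminal. For example, echo writes its arguments to standard output, so they appear on the screen: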

$ echo "Echo prints whatever you type."

Echo prints whatever you type.

We may redirect a program's standard output using the > character:

$ echo "Here's a quick way to write one-line files." > quickie.txt

$ ls

quickie.txt

$ cat quickie.txt

Here's a quick way to write one-line files.

$ ls quickie.txt > dir.txt

$ ls

dir.txt  quickie.txt

$ cat dir.txt

quickie.txt

Similarly, we can use the < character to redirect a program's standard input:

$ cat < dir.txt

quickie.txt

We can use > and < together:

$ cat < dir.txt > copy.txt

$ cat copy.txt

quickie.txt

Another property of functions that is useful to us is composition: we can make the output of one function the input of another. When we use |, the pipe symbol, we are composing two functions; see The Shell. For example, we can pipe the output of the info program into less, and scroll through it a page at a time:

$ info gcc | less

Understanding standard input and output, redirection, and pipes is fundamental to intermediate and advanced use of the GNU command shell. Keep this section in mind while reading the rest of this manual.
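Pipes can be chained to compose more than two programs. Here is a minimal sketch, run in a scratch directory created with mktemp; the three file names are made up for the example.

```shell
#!/bin/bash
# Scratch directory with three example files.
work=$(mktemp -d)
cd "$work" || exit 1
touch apple.txt banana.txt cherry.txt

# Three programs composed with pipes:
#   ls       lists the file names, one per line (its output is a pipe)
#   grep an  keeps only the names containing "an"
#   wc -l    counts the lines that are left
ls | grep 'an' | wc -l
```

Only banana.txt contains "an", so the pipeline counts one line.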

Your computer is full of files. Some of these files are your personal files; others, such as /etc/passwd, are used by the system. You may often want to find certain files on your computer, or find specific information in one or more files. This searching could be done manually through many invocations of cd, ls, and less; however, repetitive tasks such as searching are the sort of jobs for which computers were invented, and your GNU system has several tools to make searching easier.

The executables on your system are easy to find; simply use the which command:

$ which ls

/usr/bin/ls

The which command is especially useful when you have multiple copies of a program, each at a different location in your filesystem, since it allows you to find out which one is executed by default. For example, if I install one version of Mozilla using Debian's apt-get command, and another version by downloading the source from http://www.mozilla.org and compiling it, I can type which mozilla to find out which one is executed by default.

Your GNU system's more general searching tools are:

locate

find

grep

xargs

Of all these programs, locate is the simplest. locate searches through a database for files whose names match the pattern passed to it on the command line. For example, typing locate bash would print out a list of all files for which "bash" is part of the file's name.

Since locate searches through a database instead of the actual filesystem, it runs very quickly. Building this database, however, takes a relatively long time. The superuser (root) can rebuild locate's database by typing updatedb; if you try to use locate and it gives an error message mentioning the database, you probably have to run updatedb.

The find command is significantly more flexible than locate, and therefore more complicated to use. Since find searches through the filesystem instead of a database, it finds files more slowly than locate does. find can find files based on such properties as file name, size, type, and owner, or any combination of these attributes.

When invoking find, you must tell it where to begin searching, and what criteria to use. For example, to find all files whose names begin with the letter "b" in the current directory and all its subdirectories, you would type:

$ find . -name 'b*'

If my username is "tom" and I want to find all files in /tmp that belong to me, I would type:

$ find /tmp -user tom

/tmp/.X11-unix

/tmp/cvs610c01f0.1

/tmp/cvs610c0d70.1

/tmp/cvs610c8570.1

/tmp/XWinrl.log

I can also use conditions in combination; for example, I can search for files in /tmp that belong to me and begin with the string "cvs":

$ find /tmp -user tom -name 'cvs*'

/tmp/cvs610c01f0.1

/tmp/cvs610c0d70.1

/tmp/cvs610c8570.1
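find can also select by type and size, as mentioned above. A minimal sketch in a scratch directory; the file names and the 10 KiB threshold are arbitrary choices for illustration, and the size unit described in the comment is that of GNU find.

```shell
#!/bin/bash
# Scratch tree: one small file and one larger file in a subdirectory.
dir=$(mktemp -d)
mkdir "$dir/sub"
printf 'hello\n' > "$dir/small.txt"
head -c 20000 /dev/zero > "$dir/sub/big.dat"   # 20000 bytes of zeros

# -type f matches regular files only, so the sub directory is skipped.
find "$dir" -type f

# Tests are combined with an implicit "and": regular files larger than
# 10 kilobytes (in GNU find, k means units of 1024 bytes).
find "$dir" -type f -size +10k
```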

We used the find command to find files matching given criteria. To search the contents of one or more files, we can use the grep command.(7) grep is like a flexible, command-line version of the Search or Find dialogue boxes common in modern GUIs.

The most basic way to use grep is to pass it a string, and a file in which to search for the string:

$ grep 'microkernel' faq.en.html

<p>When we are referring to the microkernel, we say ``Mach'' and use it

microkernel'' instead of just ``Mach''.

<p>{NHW} If you are using the GNU Mach microkernel, you can set your

We can see that grep outputs the lines of the file faq.en.html that contain the string "microkernel".

We can also use a pipe to make grep search for a string in the output of another program:

$ echo "Mach is a microkernel" | grep 'microkernel'

Mach is a microkernel

The xargs command reads a list of items, usually file names, from its standard input, and runs a command with those items as its arguments. Most often, we pipe a list of files into xargs. A very simple example would be:

$ ls | xargs cat

This command would print the contents of all files in the current directory to the screen (do not do this on a directory containing many large files!).

A more useful application of the xargs command comes from combining it with grep to search for files based on their contents. For example, we can type:

$ ls | xargs grep 'microkernel'

to search the current directory for files that contain the word "microkernel". We can do more sophisticated searches by combining find with xargs and grep; for details, see Contents.
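As a taste of combining all three tools, here is a minimal sketch that searches only .txt files for "microkernel". It relies on the -print0 option of find and the -0 option of xargs (available in GNU find and xargs), which keep file names containing spaces intact; the file names are made up for the example.

```shell
#!/bin/bash
# Scratch files; note that one name contains a space.
dir=$(mktemp -d)
printf 'Mach is a microkernel\n' > "$dir/notes one.txt"
printf 'The Hurd runs on Mach\n'  > "$dir/notes two.txt"
printf 'microkernel design\n'     > "$dir/draft.md"

# find picks the .txt files; -print0 ends each name with a NUL byte so that
# names containing spaces survive. xargs -0 reads that format and passes the
# names to grep, and grep -l prints only the names of files that match.
find "$dir" -type f -name '*.txt' -print0 | xargs -0 grep -l 'microkernel'
```

draft.md also mentions "microkernel", but it is not reported because find never passes it to grep.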

Note that in modern desktop environments such as GNOME, GUIs exist that let you search for files much more easily. For the majority of users, these tools are sufficient. The advantage of the command line-based tools discussed in this section is that their output can be redirected, and they can be used in shell scripts (see Introduction to Scripting with Bash).

For more information on searching your computer, see Overview.

These next few paragraphs are meant to acquaint you with the extended capabilities of the shell. Bash is a very powerful program. You may have seen the term "Shell Programming"; that is one of the things Bash can be used for. The shell can be used to write scripts that gather a handful of commands into one file and run them automatically. The start of a typical shell script will look like this:

#!/bin/bash
# The above line has to be the very first line of your
# script. If it is not, it is treated as an ordinary comment.
#
# This is a comment
uname -a ; df /
echo This is a shell script
uptime &&
cat /etc/fstab

The very first line is an interesting one. The #!/bin/bash line tells the system which program to use to run the script. The lines after it that start with the '#' character are comments: they let us write notes in the script that will not be executed.

Finally we get to the command uname -a, which tells us the operating system we are using; the -a option makes it print all the information it has. Following the first command is the ';' character, which separates two commands in a list. The semicolon tells bash to execute the command on the left first, then the one on the right.

The '&&' symbols after "uptime" in the above script tell bash to execute the next command only if the uptime command succeeds (that is, exits with a status of zero). The difference between ";" and "&&" is that with ";" bash continues to the next command even if the first one fails and prints an error, whereas with "&&" it skips the next command after a failure.
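The difference is easy to check with the standard true and false commands, which do nothing except succeed or fail. A minimal sketch:

```shell
#!/bin/bash
# false does nothing and fails (exit status 1);
# true does nothing and succeeds (exit status 0).

false ; echo "after ;"             # ';' runs echo anyway
false && echo "after && (failed)"  # '&&' skips echo, because false failed
true && echo "after && (success)"  # '&&' runs echo, because true succeeded
```

Running it prints "after ;" and "after && (success)", but not the middle line.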

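In order to execute the above script, its file attributes (permissions) need to be changed to make it executable. To change file permissions you use the chmod command; chmod 755, for example, lets the file's owner read, write, and execute it, and lets everyone else read and execute it. The chmod command is pretty tricky, so read the info and/or man pages to fully understand its capabilities.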
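A minimal sketch of the whole cycle: write a short script, make it executable with chmod 755, and run it by its path. The script name hello.sh is hypothetical, and the script is created in a scratch directory.

```shell
#!/bin/bash
# hello.sh is a hypothetical script name, created in a scratch directory.
dir=$(mktemp -d)
script="$dir/hello.sh"
printf '#!/bin/bash\necho This is a shell script\n' > "$script"

# 755 means rwxr-xr-x: the owner may read, write, and execute the file;
# the group and everyone else may read and execute it.
chmod 755 "$script"

# Now the script can be run by giving its path.
"$script"
```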

Bash has other time-saving features, for instance variables. You can use a short name to stand for the full path to a directory; the export command is typically used for this. Here is an example:

bash-2.05$ export SRC=/home/src

bash-2.05$ echo $SRC

/home/src

In the above example we use echo to tell us the value of 'SRC'. Without the '$' character, echo would just print out 'SRC'. The echo command is used extensively in shell scripts to place text on the screen, either for debugging purposes or to prompt the user for input.
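What export adds over a plain assignment such as SRC=/home/src is that the variable is also passed to programs started from the shell, such as other scripts. A minimal sketch showing the difference, using bash -c to start a child shell; the variable name LOCAL_ONLY is made up for the example.

```shell
#!/bin/bash
# A plain shell variable stays inside the current shell...
LOCAL_ONLY=/home/src
bash -c 'echo "child sees LOCAL_ONLY=[$LOCAL_ONLY]"'   # prints: child sees LOCAL_ONLY=[]

# ...while an exported variable is also handed to child processes.
export SRC=/home/src
bash -c 'echo "child sees SRC=[$SRC]"'                 # prints: child sees SRC=[/home/src]
```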

One great thing about shell scripts is how quickly you can put them to work. A compiled program must be written, compiled, and then run; a script is simply written and then interpreted by the shell. When scripts get too large or complicated, they are sometimes rewritten in a compiled language, because an interpreted script generally runs more slowly than an equivalent compiled program and uses resources that could be better used elsewhere on the computer. To get a better understanding of "Shell Programming", there are numerous books and online documents available.

Your GNU/Hurd workstation relies on many shell scripts. For instance, when you log in to the shell, a couple of scripts set up your environment for you. The scripts .profile and .bashrc set things such as

As you get more comfortable with your GNU/Hurd workstation, you will end up customizing some of these values to suit your needs.

In this section, I want you to learn some extra things that will make life interesting. You will need to learn about archives. An archive is a single file that bundles many files and directories together; software is often distributed this way. Archives come in many different formats. You may have heard of a tarball: this is one form of archive. The file extension usually tells us how the file was archived and compressed. Common forms are .tgz, .tar.gz, .tar.bz2, .gz, etc.

Archiving is a method of packaging files. It has been around since the early days of computing. The tar command that we use when working with archives stands for "tape archiver". It was originally used to create archived files for backup on large tape drives.

Closely associated with archiving is the concept of compressing. Various algorithms can be used to pack data into a smaller format. Archives are often compressed before being distributed; for example, a tarball may have the extension .tar.gz, which means that the tarball is a tar archive that has been compressed using gzip. Most software that you try to install from source code will be packaged this way. I will try to give you some examples; if you're confused, always consult the info pages.

Let's say we get a tar file that has some documents you want to read. Make sure that you are in your home directory, then type:

bash-2.05$ tar -xvf docs.tar <ENTER>

After running this command, you would see the contents of the tar file extracted to your home directory. The x stands for "extract", the v means "verbose", which shows us the name of each file as it is extracted, and the f tells tar that the next argument is the name of the archive file. Creating an archive is very similar. You would type:

bash-2.05$ tar -cvf new.tar foo1/ foo2/ <ENTER>

The -c is to create the tar file. We give the name of the tar file right after -f, then the contents that we want. In the above example, we created a file called new.tar and added the directories foo1/ and foo2/ to it. With the -v option we see what is being added.

The gzip compression format is commonly used in the GNU system. Files with the extension .gz are associated with gzip. The utilities tar and gzip work so well together that GNU tar can compress and decompress gzipped archives itself, using its -z option. Note, however, that tar cannot be used to decompress gzipped files that are not tar archives.

bash-2.05$ tar -zxvf file.tar.gz <ENTER> #This file was archived with tar, then compressed with gzip

bash-2.05$ gzip -d file.gz <ENTER> #No .tar in the filename means you use gzip

In the first example, the z option tells tar that the file has been gzipped. The second example is the gzip command, using the -d option to decompress file.gz. gunzip is a command that is equivalent to gzip -d, but more intuitive.

bash-2.05$ gunzip file.gz <ENTER>

To compress a tar file, or any other file, using gzip, you would type:

bash-2.05$ gzip -9 file.tar <ENTER>

The -9 means best compression; the command replaces file.tar with the compressed file.tar.gz. There are many options available for gzip. A quick browse through the info pages will tell you everything you need to know.
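To tie the tar and gzip options together, here is a minimal round-trip sketch using GNU tar: create a gzipped archive, list it with t, and extract it into a separate directory with -C. The names foo1, new.tar.gz, and restored are made up for the example, and everything happens in a scratch directory.

```shell
#!/bin/bash
# Scratch directory with a small example tree.
work=$(mktemp -d)
cd "$work" || exit 1
mkdir foo1
printf 'chapter one\n' > foo1/chapter1.txt

# c = create, z = compress with gzip, f = name of the archive file.
tar -czf new.tar.gz foo1/

# t = list the archive's contents without extracting anything.
tar -tzf new.tar.gz

# x = extract; -C unpacks into another directory so we can compare.
mkdir restored
tar -xzf new.tar.gz -C restored
cat restored/foo1/chapter1.txt     # prints: chapter one
```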

Another common form of compression is the bzip2 format. tar archives compressed using bzip2 usually have the extension .tar.bz2. bzip2 usually compresses files more tightly than gzip does, though it is slower. To decompress a bzip2 file you would type:

bash-2.05$ bunzip2 file.bz2 <ENTER>

Like gzip, bzip2 has many options. You will probably see bzip2 used to compress tar files. The bzip2 utility has several companion commands, such as bunzip2 and bzcat. To extract a file that has been archived with tar and compressed with bzip2, you would do something similar to the following:

bash-2.05$ bzcat file.tar.bz2 | tar -xvf - <ENTER>

The bzcat command is a combination of bunzip2 and cat: it writes the decompressed data to its standard output. We bzcat the file, then pipe (|) the result to the tar command. Once again, I use -x to extract the files and -v to see them as they are extracted; -f - tells tar to read the archive from its standard input.

To compress a file using bzip2 you would type:

bash-2.05$ bzip2 -z file1.txt <ENTER>

This command compresses file1.txt to file1.txt.bz2. You can also use the '-9' option for best compression.

Hopefully, this little chapter has gotten you more interested in using GNU/Hurd.

If you are still confused, please read the info pages for the command that's giving you trouble. Typing info by itself will give you a list of all the programs on your Hurd machine that have documentation with them.

The best way to learn GNU utilities is to read and practice. GNU/Hurd is just like music or sports: you can never learn enough.

This chapter is meant to give you a stepping stone to freedom.

After the last chapter, you're probably wondering about this "root user" that everyone is so fond of. The Hurd aims to take root out of the picture as much as possible, giving you the freedom to do things that only root can do on legacy Unix systems. The designers and developers want you, the user, to be able to do things that you cannot do on a traditional Unix-like operating system.

For the time being, though, you will have to deal with the root user.

One reasonable recommendation from Unix gurus is to create a normal user account. A normal user can generally write only to his or her home directory (and a few shared places such as /tmp), and has limited access to system configuration. The reasoning behind this is that a normal user cannot damage the system as a whole. This is a decent compromise, because the more we explore and play, the more likely we are to lose an important file or make some other fatal mistake. So what we'll do is set up a user account for you. You must be root to do this.

bash-2.05# adduser <ENTER>

That's it! The computer will prompt you for a username and password; you can then accept the defaults or answer the rest of the questions as you require. To change the password on the account you just created, you would type:

bash-2.05# passwd <USER_NAME> <ENTER>

Please Enter a Password:

There is a command that lets you become root while logged in as a normal user. This command is called su. When you type su, you are required to enter the password for the root account (if it has one). As root, you can also su to another user; for example, if an ssh (Secure Shell) server doesn't allow root logins, you can su to another user who has access to that server.

When you are done being that other user, you type exit and you're back as root.

bash-2.05$ su <ENTER>

password:

There are many commands related to administering Unix-like operating systems. As a normal user, you will not need most of them. Making a new user and giving him or her a password is enough for now. If you are interested in becoming an administrator, there are plenty of books and classes out there.

Traditionally, Unix-like systems have used a command called mount to merge removable storage devices, such as CDs and floppy disks, into the file system. On GNU/Hurd, we don't mount anything. The Hurd has a similar mechanism for accessing devices, called "setting a translator".

We use the settrans command to do this. settrans attaches a type of Hurd server, called a translator, to a node in the filesystem; here, the translator gives access to a device supported by the kernel. For instance, on my GNU/Hurd system my CD-ROM device was detected as hd2. To get to my data on an iso9660 CD, I first had to make the device node, and then run the settrans command. This had to be done as the root user (root is the only user that has these privileges).

The term iso9660 refers to ISO 9660, a standard published by the International Organization for Standardization (ISO) that defines the filesystem format for CD-ROM media. Some systems use an extension to this standard called Joliet, which was introduced by Microsoft and is typical of CDs made for Microsoft Windows. It is also supported on GNU/Hurd, GNU/Linux, and the BSDs.

bash-2.05# cd /dev <ENTER>

bash-2.05# ./MAKEDEV hd2 <ENTER>

bash-2.05# settrans -ac /cdrom /hurd/isofs /dev/hd2 <ENTER>

This isn't too hard, is it? The options after settrans are very important.

The -a makes the /hurd/isofs translator active, starting it immediately. The -c creates the /cdrom node if it does not already exist, so it is only needed the first time.

Now you can type cd /cdrom and see the data on your CD.

This section will help you get some data off of your floppy disk. Note that the vfat (fat32) and msdos (fat16) filesystems are currently not supported. Support will come eventually, but for now you can only access GNU/Linux (ext2) floppies. You must be root to get to your floppy.

joe@hurd# settrans -a /floppy /hurd/ext2fs /dev/fd0 <ENTER>

Now you can head to the /floppy directory and see your data.

On Unix-like operating systems, it has always been a struggle for new users to get their hardware configured. Unix-like systems have a command called dmesg that allows users to view what hardware the kernel has detected.

The command dmesg prints to your terminal all the messages that we see at boot. Unfortunately, the dmesg command has not been ported to GNU/Hurd. It should be ported in the near future. For now, we have to use a couple of GNU programs to get equivalent information:

bash-2.05# cat /dev/klog > dmesg & <ENTER>

bash-2.05# less dmesg <ENTER>

As users get used to Unix-like systems, they should gain a decent idea of what hardware is in their computers. This section should help new users discover some of it, and hopefully teach them a little along the way.

A typical computer has many common pieces of hardware regardless of vendor. A computer has these things in common:

Some systems lack some of these pieces. Typically the missing pieces are omitted because of cost, because the hardware is unnecessary, or for other specialized reasons.

If your computer came with a printed manual, that manual will be very helpful; however, it will probably not tell you everything that you need to know. A person with a screwdriver can open up a computer and view some of the hardware, and just by looking at a card you can get a lot of important information. On modern computer hardware, vendors are doing a better job of labeling their products. This is a great thing now that we have the Internet and search engines: you can write down what you see printed on a card, type that information into a search engine, and, in a matter of seconds, find out a great deal about it.

The motherboard lies at the heart of your computer's hardware. The name "motherboard" comes from the fact that all other pieces of hardware are connected to this board.

The motherboard is a large circuit board. It has slots into which you can insert expansion cards, such as a video card or a network card. When a computer advertisement mentions the number of expansion slots the motherboard has, it is referring to these slots.

The motherboard also has slots for RAM (memory) modules and for CPUs. The number of RAM slots determines how far the computer's memory can be upgraded. As for CPUs, if your friend tells you that her computer is a dual-processor machine, that means the machine's motherboard has slots for two CPUs and that there is a CPU in each slot.

Other pieces of hardware that connect to the motherboard are power supplies, cooling fans, and disk drives. Together, your computer's hardware provides you with a platform on which you can run a complete operating system.

Many of the chips and other components on the motherboard are attached with SMT (surface-mount technology), in which tiny components such as transistors, diodes, and capacitors are soldered directly onto the surface of the board and connected by thin copper traces. Together these components communicate with the CPU, the devices, and the BIOS. The motherboard uses an interrupt controller to manage IRQs (interrupt requests), the signals that devices use to interrupt the CPU when they need to send or receive data. Devices are assigned IRQ numbers; for instance, the keyboard normally uses IRQ 1, while the primary and secondary IDE controllers, to which the hard disks are attached, use IRQ 14 and IRQ 15. A classic PC motherboard has 16 IRQs, numbered 0 to 15, and newer motherboards provide more.
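The conventional IRQ assignments of the classic PC/AT design can be written down as a small lookup table. The sketch below encodes the usual defaults only; individual machines may differ, especially on the lines left free for expansion cards. The function name irq_owner is hypothetical.

```sh
# irq_owner N: print the device that conventionally uses IRQ N on a
# classic PC/AT-compatible motherboard (IRQs 0 to 15).
irq_owner() {
    case "$1" in
        0)  echo "system timer" ;;
        1)  echo "keyboard" ;;
        2)  echo "cascade to IRQs 8-15" ;;   # link to the second controller
        3)  echo "serial port COM2" ;;
        4)  echo "serial port COM1" ;;
        6)  echo "floppy disk controller" ;;
        7)  echo "parallel port LPT1" ;;
        8)  echo "real-time clock" ;;
        12) echo "PS/2 mouse" ;;
        13) echo "math coprocessor" ;;
        14) echo "primary IDE controller" ;;
        15) echo "secondary IDE controller" ;;
        5|9|10|11) echo "usually free for expansion cards" ;;
        *)  echo "not a classic PC IRQ (0-15)" >&2; return 1 ;;
    esac
}
```

For example, irq_owner 14 prints primary IDE controller.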

Users need not be afraid of working on their computer. In the modern computer age, manufacturers have made it very easy for us. Everything is like a puzzle piece. Almost no piece of hardware can be put in upside down or reversed. The hardest part of it all is the cabling that goes from the motherboard to the disks (hard drive, floppy drive, CD-ROM drive).

When you take apart your computer for the first time, it is always good to have a magic marker or some other writing utensil handy. Mark your cables as you pull them out, in case you have to stop working (for example, to take out the dog). When you get back to what you were doing, you'll have some idea of where each cable belongs. Also remember that the ribbon cables that go to your internal devices always have a red stripe along one edge marking pin one. Finally, never try to do two things at the same time; this only makes things more confusing.

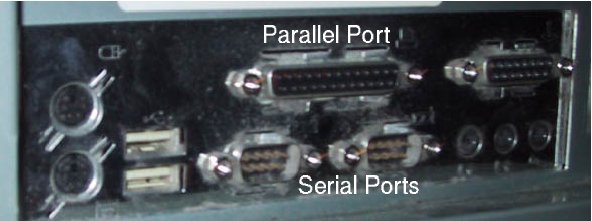

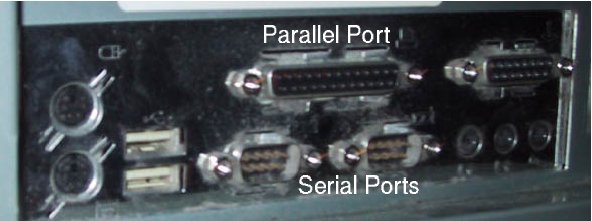

An internal device is usually a hard disk drive, floppy disk drive, CD drive, video card, network card, or other important add-on. The disk drives are connected to the motherboard with ribbon cables. The video card, network card, and possibly a modem are inserted into slots on the motherboard. The common slot types are PCI, ISA, and AGP.

The AGP (Accelerated Graphics Port) slot is for video cards only. ISA (Industry Standard Architecture) slots are for older 16-bit devices; you won't see many ISA slots on today's motherboards. PCI (Peripheral Component Interconnect) slots, which are for 32-bit devices, are the most common slots on today's motherboards. PCI slots are usually beige and are shorter than the long black ISA slots, which are nearly obsolete.

The cables connecting the disks to the motherboard are IDE (Integrated Drive Electronics) ribbon cables. The motherboard also has a separate controller and cable for your floppy disk drive. Both the IDE and floppy controllers should be labeled in very small print on your motherboard, and their connectors should have very small numbers at the ends to show where pin one is. The red stripe on each cable matches pin one on the controller to pin one on the disk drive. If you're lucky, your disk drives and cables will be brand new and keyed so that they can only be connected the right way, and none of this will matter.

Each IDE controller lets you connect two devices, called the master and the slave. The master is normally the first or only drive on an IDE controller. Master drives are usually bootable and contain the operating system's bootloader and kernel. A slave drive can be a CD-ROM drive or another hard disk. Some operating systems are fussy about where they reside, so it is generally a good idea to boot an operating system from a master hard drive. This isn't written in stone: a lot of software developers have multiple operating systems on a single computer, yet they usually put their bootloader on the master IDE drive, along with a couple of the operating systems.

The motherboard usually has two IDE controllers: a primary and a secondary. This allows a total of four IDE devices to be cabled to the motherboard, although some newer motherboards are manufactured with up to four IDE controllers. A typical machine might have one cable connecting a hard disk to the primary IDE controller and another connecting the CD-ROM drive to the secondary IDE controller. The machine might instead have the CD-ROM drive connected as a slave on the primary IDE controller. Whether a drive acts as master or slave is set with small jumpers on the drive itself, and there should be clear instructions on the drive showing how the jumpers should look for each configuration. The jumper selection area on a hard disk should look similar to the figure below.

Figure: jumper block with three pairs of pins, labeled MASTER, SLAVE, and CABLE SELECT.

If your new disk is going to be the master on the secondary IDE controller, you need to make sure its jumpers are set accordingly. If the jumpers are wrong or your cables are backwards, your computer will not work the way you expect it to.
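On GNU/Hurd, a drive's IDE position determines its device name: GNU Mach calls the primary master hd0, the primary slave hd1, the secondary master hd2, and the secondary slave hd3. That is why the CD-ROM example earlier uses /dev/hd2, a drive set as master on the secondary controller. The sketch below turns a position into a name; it covers only the usual two controllers, and the function name hurd_ide_name is hypothetical.

```sh
# hurd_ide_name CONTROLLER POSITION: print the GNU Mach device name
# for an IDE drive. CONTROLLER is "primary" or "secondary";
# POSITION is "master" or "slave".
hurd_ide_name() {
    case "$1" in
        primary)   base=0 ;;   # hd0 and hd1
        secondary) base=2 ;;   # hd2 and hd3
        *) echo "unknown controller: $1" >&2; return 1 ;;
    esac
    case "$2" in
        master) offset=0 ;;
        slave)  offset=1 ;;
        *) echo "unknown position: $2" >&2; return 1 ;;
    esac
    echo "hd$((base + offset))"
}
```

For example, hurd_ide_name secondary master prints hd2, the name given to ./MAKEDEV and settrans in the CD-ROM example.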

The devices connected through slots, and the disks connected to the controllers, all end up communicating with the CPU over the bus, a set of parallel wires on the motherboard that carries data between the CPU and the other components. You sometimes hear people say "I have a hundred megahertz bus"; this is the clock speed at which data travels along the bus to the CPU. Megahertz (MHz) is also the unit used to measure the speed of a CPU.

The CPU sits either in a slot on the motherboard or in a ZIF (Zero Insertion Force) socket. There are different types of ZIF socket for different CPUs; they are usually labeled Socket 8, Socket 7, Socket 3, and so on. Slot-based CPUs are primarily Pentium II and Pentium III processors. ZIF sockets take CPUs in a PGA (Pin Grid Array) package, and the newest of these sockets are usually for Athlon and Pentium 4 processors. A ZIF socket makes it very easy to replace the CPU: with a little lever on the side of the socket, a CPU can be removed and another put in its place.

Whatever the configuration, the CPU is covered by a heat sink, usually with a fan built into it. The fan and heat sink play an important role: they keep the CPU from burning up and your computer from overheating. If you try to use a computer without a working fan or without a heat sink, you're likely to run into sporadic errors, ranging from software errors to the computer restarting by itself.

While the CPU is working on data, the machine uses RAM to store the temporary data it needs. RAM is one important component that allows a computer to multi-task, that is, to do more than one thing at the same time. If a computer doesn't have enough RAM for what we need to do, it will not multi-task efficiently. RAM is mounted on the motherboard in DIMM and/or SIMM slots, and a module can only go in one way. A typical problem is not pushing a module in completely; another is pushing it too hard and cracking the motherboard. If either happens, when you start your computer you may get no picture, only beeps, or no response at all.

DIMM stands for Dual Inline Memory Module, and the DIMM is the most common type on newer motherboards. The SIMM, which stands for Single Inline Memory Module, is not seen as often as the DIMM; it is slower and is found mainly on older motherboards, although the two can coexist on some boards. The problem with mixing the two is that SIMMs can only work with 66 MHz bus systems, so if you also have a DIMM rated for 100 MHz, it will drop to 66 MHz to work alongside the SIMM. On some motherboards, running the bus at 66 MHz also changes the CPU speed. The modules also differ in their pins: a DIMM has 168 pins, while a SIMM has only 72.
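A slower bus can change the CPU speed because, on motherboards of this kind, the CPU clock is derived from the bus clock: the CPU runs at the bus speed multiplied by a fixed clock multiplier. The sketch below does that arithmetic. The function name cpu_mhz and the multiplier of 5 in the example are illustrative assumptions, not values for any particular CPU.

```sh
# cpu_mhz BUS_MHZ MULTIPLIER: print the CPU clock speed in MHz that
# results from a given bus speed and clock multiplier.
# awk is used so that fractional multipliers such as 4.5 also work.
cpu_mhz() {
    awk -v bus="$1" -v mult="$2" 'BEGIN { print bus * mult }'
}
```

With a multiplier of 5, cpu_mhz 100 5 prints 500, while cpu_mhz 66 5 prints 330: the same CPU runs 170 MHz slower once the bus drops to 66 MHz.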

The BIOS is another good place to find information about the computer's hardware. The BIOS is the motherboard's little brain. It keeps its settings in the machine's CMOS (Complementary Metal-Oxide Semiconductor) memory, a small battery-backed memory that keeps its contents while the computer is turned off. The CMOS holds data such as hard disk information (primary or secondary, master or slave, heads, sectors, etc.), as well as the date, the time, and some RAM-related settings. A user can enter the BIOS setup program only at boot time; usually a message tells you to press F1, F2, or possibly Delete to enter setup. There are many confusing options in the BIOS, so it is a good idea to have the manual for your motherboard at hand when dealing with unfamiliar features of the board. If you change something that causes havoc on the machine, there is always an option to restore the factory default settings. This can be a lifesaver if you change too many things and forget what you did.

A computer's power supply has one connector that powers the motherboard. The motherboard's power connector is a large white block with yellow, red, and black wires. The other connectors from the power supply are smaller and D-shaped, with the same colored wires. The yellow wires carry +12 volts, the red wires carry +5 volts, and the black wires are ground (0 volts). Neither the motherboard connector nor the internal device connectors can be put in backwards or upside down. One common problem is not pressing the power connectors in completely. The floppy disk drive has its very own power connector, which is also keyed (think key and keyhole) so that it goes in correctly. If your power supply is running hot or overheating, this can cause your machine to reboot and occasionally lock up. To find out whether this is the case, simply touch the back of the computer right above the power supply; if it is hot to the touch, this may be the clue you need. Power supplies also come in different wattage ratings (240 to 400 watts). CPUs from different manufacturers have specific power requirements that affect the choice of power supply and motherboard, and these requirements can sometimes add confusion when upgrading your system.
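Wattage ratings and rail voltages are related by a simple formula: the power delivered on a rail is its voltage times the current it supplies (watts = volts × amps). The sketch below adds up that product over several rails to give a rough figure; real supplies also have combined limits, so the wattage printed on the label remains the number to trust. The function name psu_watts is hypothetical, and so are the currents in the example.

```sh
# psu_watts VOLTS AMPS [VOLTS AMPS ...]: print the total power in
# watts for the given voltage/current pairs (watts = volts * amps).
# Arguments alternate: odd positions are volts, even ones are amps.
psu_watts() {
    printf '%s\n' "$@" |
        awk 'NR % 2 == 1 { v = $1; next }   # remember the voltage
             { total += v * $1 }            # add volts * amps
             END { print total + 0 }'
}
```

For instance, with hypothetical ratings of 10 amps on the 12-volt rail and 20 amps on the 5-volt rail, psu_watts 12 10 5 20 prints 220.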